Conversational Memory That Works: Window, Summaries, and Pinned Facts

17-12-2025

In real applications using conversational agents, maintaining a coherent conversation over time is a significant technical challenge. From technical support assistants to customer service systems or educational interfaces, users expect the system to "remember" their preferences, recent history, and previous steps. However, achieving this efficiently and securely requires more than just storing a flat history of interactions.

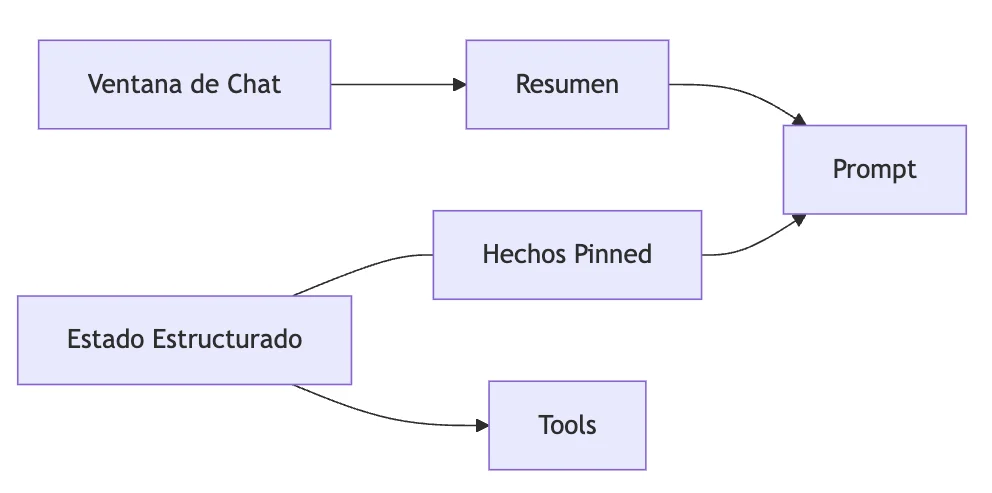

Building robust and consistent multi-turn conversational experiences demands a hybrid memory strategy that combines different mechanisms: windows, summaries, pinned facts, and structured state. This architecture allows models to make better decisions, respect the user's context, and maintain consistency over time.

1. Limitations of Flat History

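A flat history, whether sent in full or truncated to the last N messages, has several issues:

- Rapid growth in tokens and cost when the full history is sent on every turn.

- Risk of losing critical information once older messages fall outside the last N.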

- Lack of structure: the model must "deduce" what is relevant in each turn.

Therefore, it is necessary to go beyond the linear buffer.

2. Window and Incremental Summary

A common strategy is to keep a sliding window of the most recent exchanges. For long dialogues, the window is complemented with an incremental summary that condenses earlier turns into a form the model can use.

The summary should not be static: it is updated based on relevant events, state changes, or the appearance of new useful information.

Practical Example: A technical support chatbot progressively summarizes the steps already taken (restarts, executed commands) to avoid repeating suggestions.
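
As a minimal sketch of the window-plus-summary idea, the snippet below keeps recent messages within a token budget and folds evicted messages into a running summary. The names `WindowedMemory`, `count_tokens`, and `naive_summarize` are illustrative assumptions, not part of any library; the word-count tokenizer and the concatenating summarizer are placeholders for a real tokenizer and a model call.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Message = Tuple[str, str]  # (role, text)


def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: counts whitespace-separated words."""
    return len(text.split())


def naive_summarize(previous: str, evicted: List[Message]) -> str:
    """Placeholder summarizer (assumption): a real system would call a model here."""
    lines = [f"{role}: {text}" for role, text in evicted]
    return "\n".join(filter(None, [previous, *lines]))


@dataclass
class WindowedMemory:
    max_window_tokens: int
    summarize: Callable[[str, List[Message]], str] = naive_summarize
    summary: str = ""
    window: List[Message] = field(default_factory=list)

    def add(self, role: str, text: str) -> None:
        self.window.append((role, text))
        evicted: List[Message] = []
        # Evict the oldest messages until the window fits the budget,
        # always keeping at least the newest message.
        while len(self.window) > 1 and self._window_tokens() > self.max_window_tokens:
            evicted.append(self.window.pop(0))
        if evicted:
            # The summary is updated incrementally, only when something leaves the window.
            self.summary = self.summarize(self.summary, evicted)

    def _window_tokens(self) -> int:
        return sum(count_tokens(text) for _, text in self.window)

    def context(self) -> str:
        """Text to place in the prompt: summary first, then the recent window."""
        parts = []
        if self.summary:
            parts.append("Summary so far:\n" + self.summary)
        parts.extend(f"{role}: {text}" for role, text in self.window)
        return "\n".join(parts)
```

Passing the summarizer as a parameter keeps the eviction logic testable without a model in the loop.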

3. Structured State and Pinned Facts

Some data should be separated from the conversational flow and stored as structured state. This includes:

- Slots filled by the user (e.g., `selectedProductId`, `currentLocation`).

- Preferences, goals, or explicit constraints.

- Results of previous searches or confirmed actions.

These "pinned facts" act as persistent truths, accessible by the model without needing to repeat them. They can be stored as structured objects, accessible both from the prompt and from external tools.

"Facts that affect decisions → state, not just chat."

Practical Example: A booking assistant can pin as persistent facts the usual departure location, preferred mode of transport, and the maximum acceptable duration of the journey.
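
One possible shape for this separation is sketched below: slots and pinned facts live in a small state object that is rendered into the prompt on each turn. `ConversationState`, `pin`, `render_for_prompt`, and the `departureLocation` key are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class ConversationState:
    # Slots may be known but still unfilled (None).
    slots: Dict[str, Optional[str]] = field(default_factory=dict)
    pinned_facts: Dict[str, str] = field(default_factory=dict)

    def pin(self, key: str, value: str) -> None:
        # Overwrites any earlier value so only one truth per key survives.
        self.pinned_facts[key] = value

    def render_for_prompt(self) -> str:
        """Serialize the state as plain text the model can read on every turn."""
        lines = ["Known facts (treat as true unless the user corrects them):"]
        lines += [f"- {k}: {v}" for k, v in sorted(self.pinned_facts.items())]
        filled = {k: v for k, v in self.slots.items() if v is not None}
        if filled:
            lines.append("Current slots:")
            lines += [f"- {k}: {v}" for k, v in sorted(filled.items())]
        return "\n".join(lines)
```

Because the state is a plain object, the same data can be passed to external tools without parsing it back out of the chat transcript.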

4. TTL, Privacy, and Lifecycle Control

It is critical to define expiration policies (TTL) for different types of memory. Not all information should persist indefinitely. Some recommendations:

- Set TTL by data type.

- Encrypt PII and use unique keys per session/conversation.

- Allow the user to review and reset their state.

These measures increase security and improve the user experience.
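
The recommendations above could be sketched roughly as follows: an in-memory store with a TTL per data type, keys namespaced by conversation, and review/reset operations for the user. The class name `ExpiringMemory`, the `TTL_BY_TYPE` values, and the injectable clock are assumptions for illustration. Encryption of PII is deliberately left out, since it should rely on a vetted cryptography library rather than hand-written code.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple

# Hypothetical TTLs in seconds per data type; None means "until the state is reset".
TTL_BY_TYPE: Dict[str, Optional[float]] = {
    "slot": 3600.0,
    "pinned_fact": None,
    "summary": None,
}


class ExpiringMemory:
    def __init__(self, conversation_id: str,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.conversation_id = conversation_id
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._items: Dict[str, Tuple[Any, Optional[float]]] = {}

    def _key(self, name: str) -> str:
        # Unique key per conversation, so state never leaks across conversations.
        return f"{self.conversation_id}:{name}"

    def put(self, name: str, value: Any, data_type: str) -> None:
        ttl = TTL_BY_TYPE[data_type]
        expires_at = None if ttl is None else self._clock() + ttl
        self._items[self._key(name)] = (value, expires_at)

    def get(self, name: str) -> Optional[Any]:
        key = self._key(name)
        entry = self._items.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if expires_at is not None and self._clock() >= expires_at:
            del self._items[key]  # lazily drop expired entries on read
            return None
        return value

    def review(self) -> Dict[str, Any]:
        """Everything still alive, as the user would see it when reviewing state."""
        prefix = self.conversation_id + ":"
        alive: Dict[str, Any] = {}
        for key in list(self._items):
            name = key[len(prefix):]
            value = self.get(name)
            if value is not None:
                alive[name] = value
        return alive

    def reset(self) -> None:
        self._items.clear()
```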

5. Evaluation and Controlled Degradation

Memory is not infallible. It is necessary to evaluate:

- Accuracy of the summary.

- Completeness of the slots.

- Proper persistence of the pinned facts.

Additionally, an acceptable degradation behavior must be defined: for example, what to do if part of the state is lost or if the summary is ambiguous.

Practical Example: In an educational system, if the student's progress summary is incomplete, the system can ask again which topic they wish to continue before making recommendations.
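
A degradation rule like the one in the example can be expressed as a small decision function: if a required slot is missing or the summary is empty, the system asks instead of guessing. The slot name `currentTopic` and the action dictionaries are hypothetical; this is a sketch of the fallback logic, not a full evaluation harness.

```python
from typing import Dict, List, Optional

# Hypothetical slots the system needs before it may recommend anything.
REQUIRED_SLOTS: List[str] = ["currentTopic"]


def missing_slots(slots: Dict[str, Optional[str]], required: List[str]) -> List[str]:
    """Completeness check: required slots that are absent or empty."""
    return [name for name in required if not slots.get(name)]


def next_action(slots: Dict[str, Optional[str]], summary: str) -> Dict[str, str]:
    """Degrade to a clarifying question when memory is incomplete."""
    missing = missing_slots(slots, REQUIRED_SLOTS)
    if missing:
        return {"type": "ask_user", "about": missing[0]}
    if not summary.strip():
        # State is present but the progress summary was lost: ask before recommending.
        return {"type": "ask_user", "about": "progress"}
    return {"type": "recommend", "topic": str(slots["currentTopic"])}
```

The same `missing_slots` check can double as an evaluation metric, for instance by counting how often it returns a non-empty list across recorded conversations.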

Memory Architecture Diagram

Example Table: Classifying Data

| Data | Where to Store | TTL | Sensitive |
|---|---|---|---|
| `selectedProductId` | Structured State | 1h | No |
| Language Preference | Pinned Facts | Session | Yes |
| Last Messages | Chat Window | Dynamic | Partial |
| Dialogue Summary | Incremental Summary | Session | No |

Technical Checklist

- Token limit per window.

- Policy on when and how to summarize.

- Encryption of sensitive data.

- Use of unique keys per conversation.

Frequently Asked Questions

- When should summarization occur?

  - When a length threshold is exceeded or the conversation goal changes.

- What if the user's preferences change?

  - The previous data should be invalidated and the state updated securely.
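
Both answers can be sketched as small pure functions. The names `should_summarize` and `update_preference`, and the `dependents` mapping (which cached entries were derived from which preference), are assumptions made for illustration.

```python
from typing import Dict, Optional, Sequence


def should_summarize(window_tokens: int, token_threshold: int,
                     previous_goal: Optional[str], current_goal: Optional[str]) -> bool:
    """Summarize when the window exceeds its budget or the conversation goal changes."""
    over_budget = window_tokens > token_threshold
    goal_changed = previous_goal is not None and current_goal != previous_goal
    return over_budget or goal_changed


def update_preference(state: Dict[str, str], key: str, new_value: str,
                      dependents: Dict[str, Sequence[str]]) -> Dict[str, str]:
    """Return a new state with the preference replaced and derived entries invalidated."""
    if state.get(key) == new_value:
        return dict(state)
    stale = set(dependents.get(key, ()))
    # Drop entries computed from the old value so they cannot contradict the new one.
    updated = {k: v for k, v in state.items() if k not in stale}
    updated[key] = new_value
    return updated
```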

Conclusion

An effective conversational memory strategy is key to building intelligent systems that adapt to the user's context without compromising efficiency or privacy. Dynamic windows, incremental summaries, structured state, and pinned facts are complementary components that, when well implemented, allow for maintaining natural and consistent interactions over time.

At Lean Mind, we work with these types of patterns to help our clients build robust, testable conversational solutions aligned with sustainable development principles.